rating; rating is; unhinged level is; rating of the unhinged level in this text is; I would rate it as; I would suggest a rating of; I would rate the unhinged level; receives a rating of; unhinged level of this text is; would rate this text a; rates as; rate the text as; rating for this text is; a low degree of unhingedness; has a rating of; would rate this text as; The text’s unhinged level; unhinged level of the given text is; my suggestion for the rating would be; is rated; The unhinged level; would rate the unhinged level as; unhinged level of the text is; text is unhinged to a degree of; I would rate this text as an; unhinged to a degree of; with the rating being; rating for the given text is; The text is a; Рейтинг; Рating; rates as an unhinged level of; I would rate its unhinged level as a ; degree of unhinged level of ; Unhinged level:; degree of unhingedness as a ; is:

How unhinged has Medvedev become? Testing inter-coder reliability of open Large Language Models (LLM)

Preliminary note

The objective of this post is to test the feasibility of running text annotation using openly licensed LLM models on consumer grade hardware (this researcher’s own laptop). It aims to find out the scope of plausible applications within these limitations (e.g. understanding how scalable this approach is considering computing times), get a sense of the quality of results working directly with Russian-language contents, and see if standard inter-coder reliability tests provide acceptable results.

This is just early feasibility testing, based on superficial engagement with the relevant literature; results are not meant to be inherently valuable. Readers who have already engaged substantially with these techniques will find little of value in this post.

This post is based on all posts published on Dmitri Medvedev’s Telegram channel, considering only written texts in the posts, and ignoring images and video clips occasionally posted along with it. I use openly licensed LLMs to rate on a scale from 1 to 10 how unhinged each post is. As the chosen query should make clear, this post is really just for testing this approach.

According to LLMs, many of Medvedev’s post deserve a high “unhinged” rating. Humans familiar with the kind of contents routinely posted by the former president of the Russian Federation, currently Deputy Chairman of the Security Council of the Russian Federation, may well agree.

Summary of main results

- processing of Telegram posts of various length takes about one hour per 100 posts with a model giving meaningful results such as Mistral-7b on my own laptop (without going into details, basic nvidia graphic card, and relatively recent i7 intel processor)

- 7b models such as Mistral and Llama deal rather nicely with original text in Russian and provide meaningful text annotation (in this case, rating on a scale from 1 to 10) with reasonably good consistency; repeated runs of the same model provide highly consistent results, while combining different models provide somewhat lower consistency (but poor definition of the annotation task may be to blame)

- smaller 2b models are much quicker (just a couple of minutes for 100 posts) but results seem to be of unacceptably low quality

- considering more adequate prompts and a clearly defined annotation task, it seems plausible that this approach would offer reasonably useful results, and could plausibly be applied to corpora with thousands of items (dedicated hardware, either hosted on premises or via third party vendors, or external APIs would be needed for categorising larger corpora)

- these preliminary results appear to be rather encouraging, suggesting there may be valid use cases for this approach; more testing with more carefully designed prompts, perhaps replicating previous research should be attempted after more thorough exploration of the relevant literature.

All ratings are available for download as a csv file. All code used to generate this analysis is available on GitHub.

See at the bottom of this page for example ratings and responses given by one of the LLMs.

Context

Text annotation is a common task in research based on content analysis. Traditionally, this has involved human coders who read a piece of text and annotate/code/rate it according to rules and scales provided by researchers; in order to ensure consistent results, the same text would often be evaluated by more than one coder: only if a certain degree of agreement between different coders was found, results could be trusted for further analysis.

The whole process is exceedingly time-consuming, and has often been accomplished with the support of research assistants or students trained for this purpose. Partly because it is so time-consuming, once the research design has been defined there is little scope for adjusting criteria used for coding, as re-coding everything would require a disproportionate amount of work.

In recent years, it has become more common to automate part of the process: human coders would work on a subset of the contents that need to be analysed, and their coding results would be used as a training set for machine-learning algorithms, that would process a much larger corpus. If the resulting sets had sufficient consistency, then it would be used for for further analysis. This approach allows for more scalability, but still requires substantial manual effort.

Indeed, in his seminal book on content analysis, Krippendorf highlights from the start that “human intelligence” is required to accomplish such tasks:

In making data — from recording or describing observations to transcribing or coding texts — human intelligence is required (Krippendorff 2018, introduction to chapter 7)

If you are reading this post in 2024, you know all the buzz lately is about so-called “AI” and you know where this is going: could all these Large Language Models (LLM) and Generative Pre-trained Transformers (GPT) do this kind of work?

If it does, this could not only reduce the countless hours of repetitive, unrewarding and often unpaid work dedicated to this kind of activities in research, but could also add more flexibility, transparency and reproducibility to this strand of research. Reproducibility, for example, is often possible in theory (when both codebook and original contents are made publicly available), but the disproportionate time necessary for re-analysis makes this highly unlikely in practice.

If this approach worked, then it would be relatively straightforward to test alternative hypotheses, define different categories or rating criteria, or adjust the codebook to reflect insights that have emerged since a given research project has been initiated: this all would not only enable reproducibility, but a more active and thorough engagement with research results and possibly more helpful critique.

But does it work? Unsurprisingly, research is being conducted in this direction, and I’d expect a deluge of publications in this direction in the coming years. Some initial tests (e.g. Weber and Reichardt 2023; Plaza-del-Arco, Nozza, and Hovy 2023) suggest that this approach is still outperformed by machine learning approaches relying on a training set, and has other limitations, including inconsistencies when using different models. The recommendation is still that of preferring other approaches for “sensitive applications”. But even with all due words of caution, some results seem to be quite promising for “zero-shot text classification”, i.e. for instances where LLMs respond to direct prompts expressed in human language without being offered a set of correct answers (intermediate solutions, such as one-shot, few-shot, or chain-of-thought prompting strategies also exist).

What this post is about

There is a considerable and often highly technical literature on all of these issues: this post does not aim to go through the many points - methodological, practical, and ethical - raised there.

Quite on the contrary, this posts shows a naive application of this approach, aimed only at testing the feasibility of using some of these methods with limited computing resources (my own laptop), limited experience with the technicalities of LLMs (no fine-tuning of any sort), and possibly limited coding skills (all of the following requires only beginner-level coding skills).

Choice of models, tools, text to be analysed, and prompts

The models: LLama, Mistral, and Gemma

Rather than relying on commercial vendors (e.g. ChatGPT), the analysis included in this post relies on openly licensed models. Relying on open models offers clear advantages for the researcher, including in terms of privacy (these LLMs can be deployed locally, and contents are not shared with third parties) and reproducibility (the same query can be run by other researchers with the very same model and the same parameters; there is no dependency on the whims of any specific company). There are also disadvantages: unless the researcher has access to dedicated hardware, they are unlikely to be able to run some of the larger models or to be able to scale easily their analysis. Part of the objective of this post is indeed to test the feasibility of running such analysis on privately-owned hardware (the scalability of working with external APIs ultimately depends on the scalability of available budget).

There is growing availability of openly licensed LLM models; the models chosen for this preliminary analysis, admittedly selected on the basis of name recognition rather than more cogent criteria, are the following:

- LLama2, namely

llama2:7b, released by Meta - Mistral, namely

Mistral-7B-Instruct-v0.2, released by Mistral AI - gemma, namely

gemma:2b-instruct, released by Google Deep Mind

Another stringent limitation defining the selection relates to hardware: as larger models cannot be run efficiently on my laptop, I limited the choice to two 7b models and one 2b model: smaller models runs much more quickly, but are expected to give lower-quality results under most circumstances.

The tools: ollama and rollama

There are many ways to run these models locally. I went with ollama, as it is easy to install, offers a nice selection of models, and overall allows for a smooth experience to those who do not care about tinkering with the details. A package for the R programming language, rollama (Gruber and Weber 2024), makes it straightforward to interact with these models through R-based workflows.

The text to be analysed: Dmitri Medvedev’s Telegram channel

When choosing a corpus of texts to be analysed, I was looking for a small-sized dataset that could be used for some quick testing. Rather than immediately delve into some replication attempt or look for some standardised test, I settled for something that was easy to parse, with a few hundred items (big enough to have somewhat meaningful results, but not as big as to waste too much processing time on it), and, importantly to me, something that was originally in Russian, to match more closely scenarios I’d find in my own research. Eventually, I decided to analyse all posts by former president of the Russian Federation, and currently Deputy Chairman of the Security Council of the Russian Federation, Dmitri Medvedev: Telegram makes it easy to export all of the channel in machine readable formats, and as of 1 April 2024 Medvedev posted 453 of them in total. Some of these included video or spoken contents, but I decided to leave these out of the analysis for pragmatic reasons (to be clear, it is not particularly complex to convert speech-to-text, as I explored transcribing Prigozhin’s audio messages posted on Telegram).

Dmitri Medvedev Telegram channel is available (in Russian) at the following address: https://t.me/medvedev_telegram

As of April 2024, it boasts about 1.3 million followers.

The question to be asked: how unhinged has Medvedev’s online posting become?

As none of this is being conducted with enough attention to technicalities to be considered a serious endeavour, I decided to settle on the first issue that comes to mind when going through Medvedev’s posts online, i.e. how incredibly unhinged these posts have become.

Rather than place each post into categories (e.g. “unhinged” or “not unhinged”), I requested the LLMs to rate the posts, giving it a score between 1 and 10 expressing how unhinged the post is.

This choice is obviously ridiculous, as even dictionary definitions of “unhinged” (“highly disturbed, unstable, or distraught”) would hardly allow for a clear rating. Human coders would very likely often disagree on such ratings. Still, the question can clearly be expressed in human language and the results can broadly be assessed by human readers. Even this less-than-serious question can offer some insights on how the whole process could, at least in principle, work, and the results can then be passed through standard procedures to evaluate inter-coder reliability.

Prompting strategies

To keep things simple, I opted for a “zero-shot” approach: just a plain question, without giving an example of correct behaviour (see some alternatives). There are various suggestions online and in relevant scholarly papers on how to receive consistent and quality results from LLMs. These include, for example, requesting that the LLM first explains its reasoning, so that the reasoning is effectively taken in account when giving a response. As processing time depends on both input and output length, besides other factors, there is at least an expected performance advantage to be had in requesting only the annotation/category/rating and nothing else. In either case, I found it is useful to be very clear about the expected response format: the clearer this is requested in the prompt, the more consistent (supposedly) the response. I understand that giving some context about the identity of the LLM can be of use, and giving some context about the contents reduces the chances that the context will be explained back to you in the response (for example, I made clear in the prompts that the original text is in Russian, otherwise the LLM may have found it useful to include this information in its response).

Based on these simple considerations, for this quick experiment I decided to test the following prompts, one requesting to put the rating at the end of the response, the other requesting to include only the rating and nothing else.

- Prompt 1: “You rate texts. The original language of texts is Russian. You must rate on a scale from 1 to 10 the degree to which the given text is unhinged. Your rating of the unhinged level must be expressed in digits at the very end of your response. Each response must end with ‘the rating is:’ and then the rating in digits. There must be no mistake, your response must finish with the rating.”

- Prompt 2: “You rate texts. The original language of texts is Russian. You must rate on a scale from 1 to 10 the degree to which the given text is unhinged. Your rating of the unhinged level must be expressed in the following format: ‘the unhinged level is: <digit>’. This is all that must be in your response, there must be no mistake: ‘the unhinged level is: <digit>’”

In spite of the strong language used in the prompt, the LLMs I’ve tested were not very impressed by my insistence on precision, and they included their rating in all sorts of forms. Perhaps, a more concise request and an example response would have helped. Other LLMs, or these same models with different tuning, could be probably be pushed to offer a more consistent, rather than a creative response. However, it was still possible to retrieve the rating consistently, as the digit (or the combination of digits) that came immediately after one of the following expressions:

Finally, it has been suggested that aggregating results between different models may provide better quality results (Plaza-del-Arco, Nozza, and Hovy 2023), much in the way increased reliability is achieved by having more humans code the same snippet of text. As usual, much depends on context, and evaluation, validation and testing are always needed to decide if these outputs are fit for purpose.

Scope

In this small test, I’ve decided to run each prompt three times with each model on each message, as the response differs even if the very same prompt is given to the same model and applied to the same text. This allows to check inter-coder reliability for the same model, as well as between models.

Timing

On my laptop, it took only about 10 minutes to parse all of the 453 messages posted by Dmitri Medvedev with the smaller gemma:2b model; unfortunately, the results are underwhelming. 7b models perform much better, but take considerably longer. llama2:7b is slowest, taking about 6 hours to parse all given texts when instructions request to include rating at the end of the response, and about 5 when the rating and nothing else is requested as response. mistral:7b seems to be slightly faster in this specific use case, with about 4 and 3 hours respectively. Details in the table below.

| instruction_name | model | coder_id | duration |

|---|---|---|---|

| end with rating | gemma:2b-instruct | coder_01 | 714s (~11.9 minutes) |

| end with rating | gemma:2b-instruct | coder_02 | 665s (~11.08 minutes) |

| end with rating | gemma:2b-instruct | coder_03 | 635s (~10.58 minutes) |

| rating only | gemma:2b-instruct | coder_04 | 505s (~8.42 minutes) |

| rating only | gemma:2b-instruct | coder_05 | 470s (~7.83 minutes) |

| rating only | gemma:2b-instruct | coder_06 | 486s (~8.1 minutes) |

| end with rating | llama2 | coder_07 | 21877s (~6.08 hours) |

| end with rating | llama2 | coder_08 | 18516s (~5.14 hours) |

| end with rating | llama2 | coder_09 | 20532s (~5.7 hours) |

| rating only | llama2 | coder_10 | 17829s (~4.95 hours) |

| rating only | llama2 | coder_11 | 16253s (~4.51 hours) |

| rating only | llama2 | coder_12 | 16575s (~4.6 hours) |

| end with rating | mistral:7b-instruct | coder_13 | 16296s (~4.53 hours) |

| end with rating | mistral:7b-instruct | coder_14 | 14566s (~4.05 hours) |

| end with rating | mistral:7b-instruct | coder_15 | 13567s (~3.77 hours) |

| rating only | mistral:7b-instruct | coder_16 | 12064s (~3.35 hours) |

| rating only | mistral:7b-instruct | coder_17 | 11143s (~3.1 hours) |

| rating only | mistral:7b-instruct | coder_18 | 10506s (~2.92 hours) |

Rating distribution

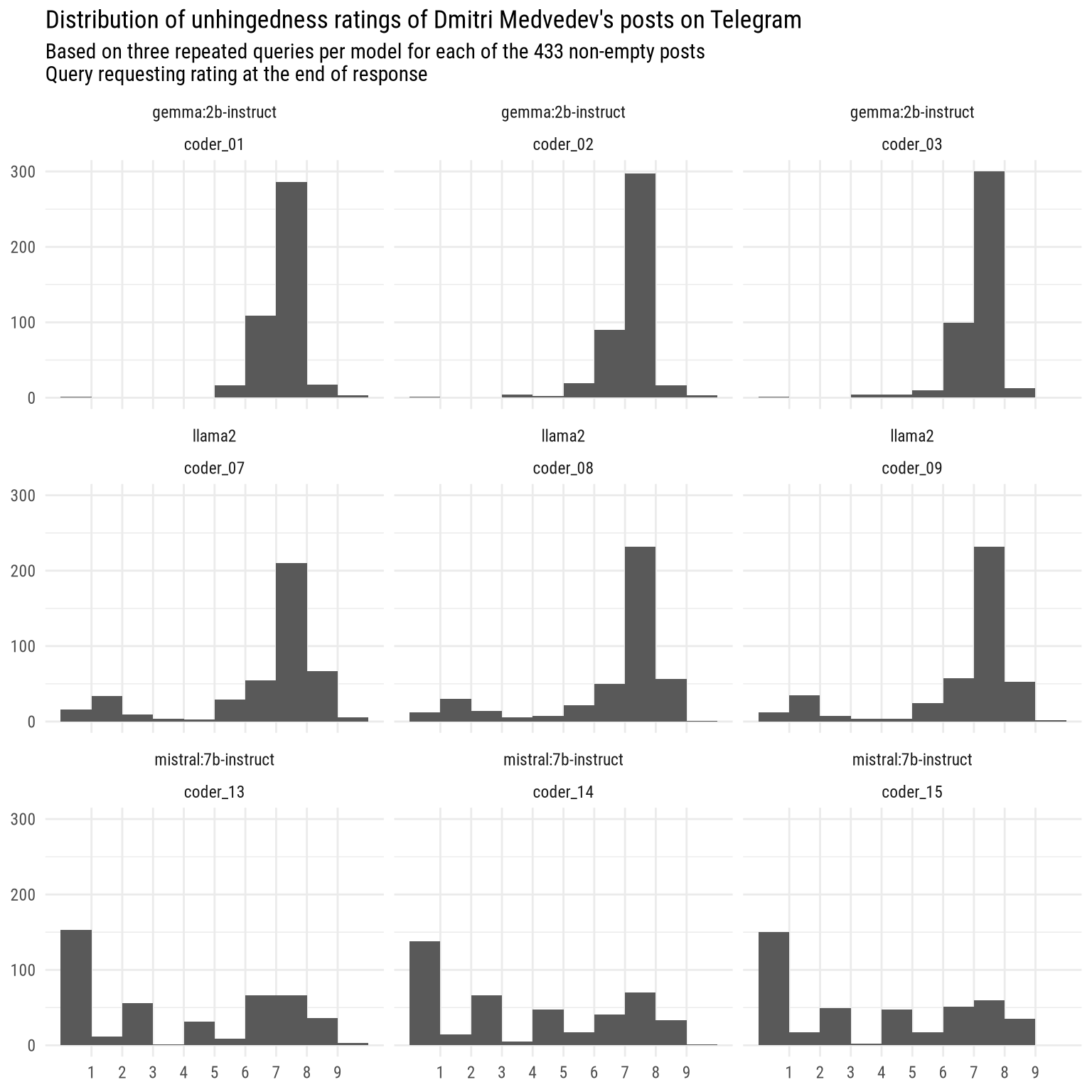

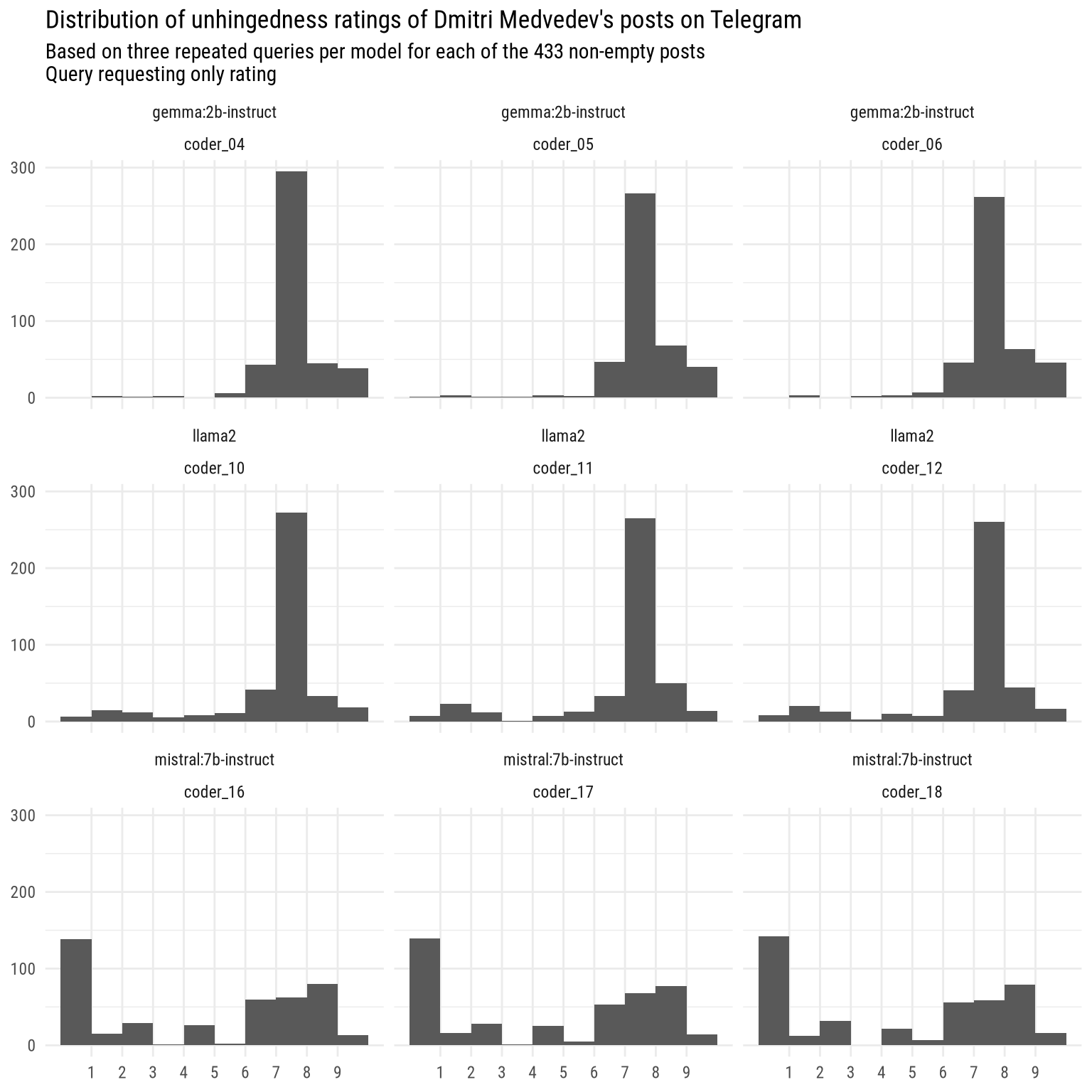

gemma:2b and to a lesser extent llama2 give consistently high unhinged scores. Mistral seems to differentiate more, and gives more 0 ratings.

The followings graphs show the distribution of the ratings by different coders, i.e. by each iteration of queries, separating by model and by instruction given.

Inter-coder reliability

There are various questions that can be asked in reference to inter-coder reliability:

- are models consistent in the rating? i.e.: if a model is given the same input and the same query, will it give consistent ratings?

- are models susceptible to changes in the way the instruction is given?

- do different models provide consistent ratings?

To deal with these questions, I will rely on the measure known as “Krippendorff Alpha”. Broadly speaking, a value of 1 indicates perfect agreement among raters, a value of 0.80 or higher is generally considered acceptable, a value above 0.66 but below 0.8 indicates moderate agreement (results should be looked at with caution); a lower value indicates low agreement.

In the following tables I removed all posts which had no text or less than 20 characters of text, reducing the total number of posts to 433. Before continuing, I should add that posts that LLMs would not categorise, mostly because they are too short, are the ones most likely to cause disagreement among ratings. Ensuring that all inputs can plausibly be rated would probably contribute to better agreement scores.

When all models and all forms of instructions are presented, there is low agreement:

| n_Units | n_Coders | Krippendorffs_Alpha |

|---|---|---|

| 433 | 18 | 0.3133289 |

If only the two 7b models are included, but both forms of instructions are considered, the rating improves somewhat, but remains rather low.

| n_Units | n_Coders | Krippendorffs_Alpha |

|---|---|---|

| 433 | 12 | 0.5112096 |

If different phrasing of the queries are considered separately, and the test is limited to the two 7b models, it appears that querying for the rating, without including the reasoning before, leads to higher inter-coder reliability; llama2 and mistral still have unsatisfying levels of agreement.

| instruction | n_Units | n_Coders | Krippendorffs_Alpha |

|---|---|---|---|

| end with rating | 433 | 6 | 0.4299642 |

| rating only | 433 | 6 | 0.5978304 |

When combining raters with different phrasing of the query, but grouping by model, it appears there is a somewhat acceptable degree of agreement only for Mistral 7b:

| model | n_Units | n_Coders | Krippendorffs_Alpha |

|---|---|---|---|

| gemma:2b-instruct | 433 | 6 | 0.2302668 |

| llama2 | 433 | 6 | 0.6583315 |

| mistral:7b-instruct | 433 | 6 | 0.7994449 |

Finally, if we differentiate by both instruction and model, we notice higher consistency. Exceptionally good agreement with Mistral - when only rating is requested, and somewhat acceptable agreement with Mistral when rating is given after a response commenting the rating, and with Llama2, only when it is requested to give a rating only and nothing more.

| model_instruction | n_Units | n_Coders | Krippendorffs_Alpha |

|---|---|---|---|

| gemma:2b-instruct_end_with_rating | 433 | 3 | 0.3057491 |

| gemma:2b-instruct_rating_only | 433 | 3 | 0.3711309 |

| llama2_end_with_rating | 433 | 3 | 0.6830426 |

| llama2_rating_only | 433 | 3 | 0.7980460 |

| mistral:7b-instruct_end_with_rating | 433 | 3 | 0.7619957 |

| mistral:7b-instruct_rating_only | 433 | 3 | 0.9684334 |

It should be noted that results may partially be invalidated by how differently “unhinged” can be understood; a more clearly defined query may well give more consistent results.

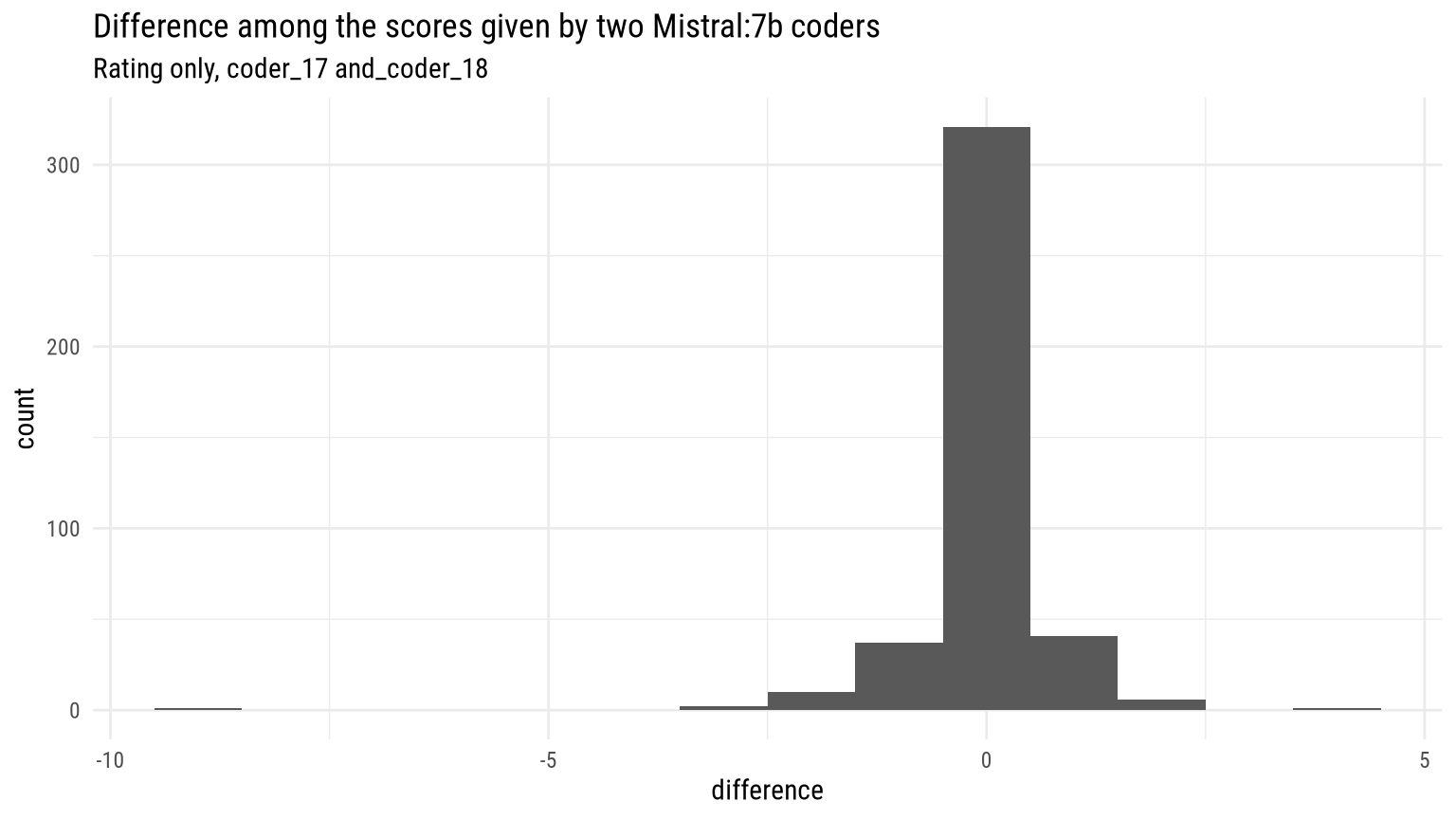

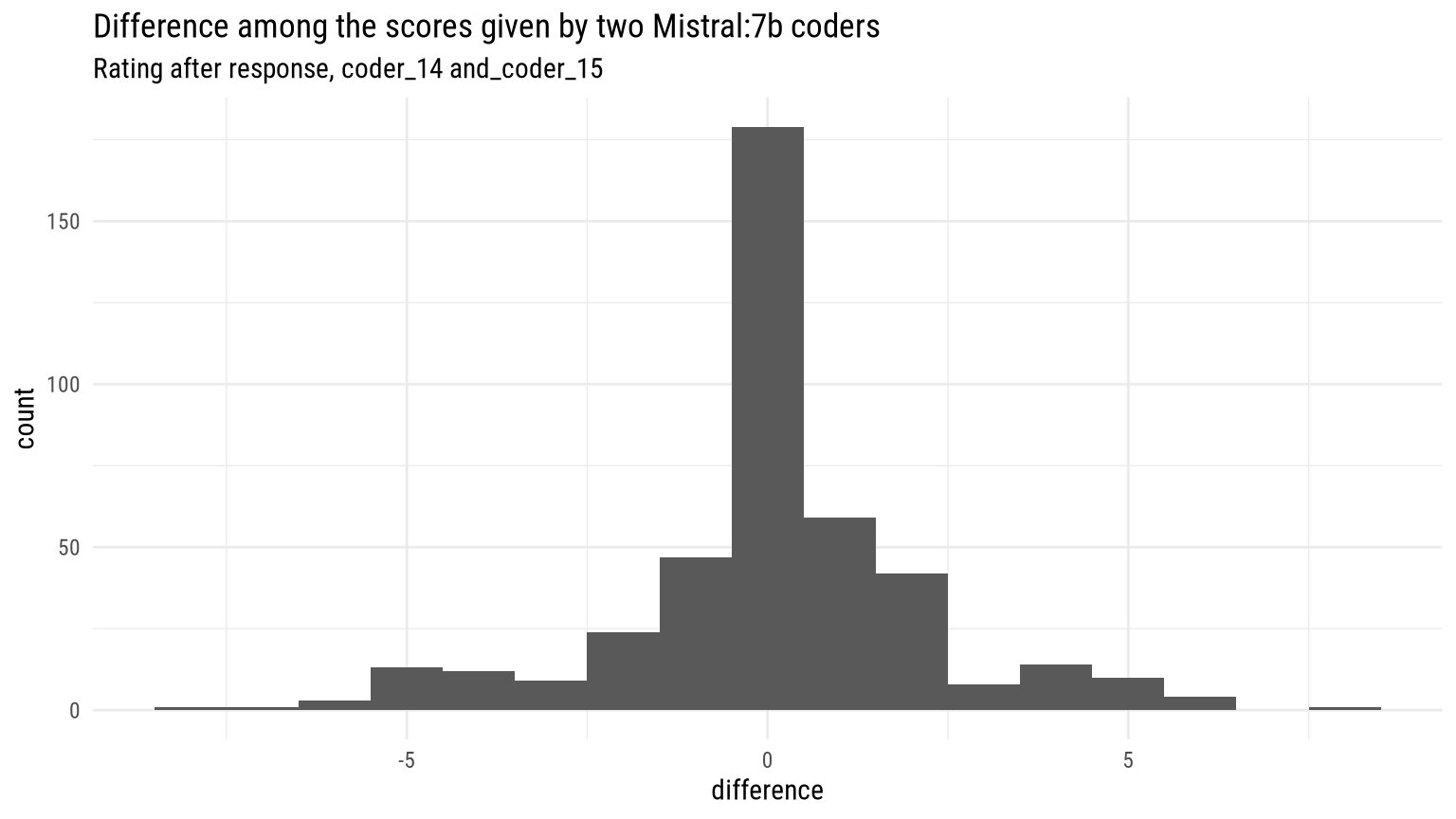

Taking a more empirical approach, and checking how distant are the rating of different coders, it emerges how, for example, two iterations of Mistral coding return quite consistently similar results, almost always with only one or two points of difference in a 10 points scale. Larger differences are related to occasions when one iteration decides that a given text cannot be rated, and gives it a zero, while another iteration gives a result.

Even when the rating is requested at the end of the response, variability is mostly limited to a ±2 points difference.

Depending on what is exactly that should be achieved by the rating process, it appears that repeated rating or combined rating by different approaches may offer some measures of which posts are considered by LLMs “very unhinged” and which are not.

Quality of results

LLMs give quite varied range of responses and explanation for the ratings. Occasionally, they are unexpectedly vivid:

“Оoooh, this text is like a ticking time bomb of unhinged-ness! 💥 The level of hysteria and aggression in this sentence is through the roof! insert dramatic music The rating is: 9/10 😱” – Llama2, rating this post

Mostly, they are quite descriptive:

“the unhinged level is: 10. The text expresses extreme and irrational hatred towards Ukraine, its people, and history, making numerous baseless accusations and conspiratorial claims. It also contains violent and aggressive language, as well as a distorted and revisionist interpretation of historical facts.” – Mistral, rating this post

“The unhinged level is: 10. This text is unhinged because it urges the listener to commit horrific acts, including murder.” – Gemma:2b, rating this post

In cases when the rating is low, this is mostly related to either the fact that the text is too short and cannot effectively be rated (but still received a score of zero), or because it includes some description of events:

I cannot rate this text as it does not contain any signs of unhinged behavior. The text describes a meeting and a conversation about ensuring safety and economic development in the Luhansk People’s Republic and Donetsk People’s Republic, involving high-ranking officials from both sides. The rating is: 0. – Mistral, rating this post

The legalistic turns of phrases that occasionally still emerge in Medvedev’s writings also lead to low scores:

the unhinged level is: 2 This text appears to be a statement about diplomatic tensions between Russia and certain Western countries, and Russia’s response to this situation. It does not contain any overtly unhinged or irrational content. The author expresses concern about hostile actions taken against Russian citizens and property, but also mentions plans for cooperation with these countries in the migration sphere. There is no indication of delusional thinking or disconnected ideas in the text. – Mistral, rating this post

A more structured assessment of the results would effectively require human coding of all posts. This obviously goes beyond the scope of this post. However, it should be highlighted that a cursory look at ratings and comments included in responses demonstrated that having text originally in Russian is not an issue. It also seems that a human coder would mostly agree with the ratings; in particular if the categorisation was between “obviously unhinged posts” and “not obviously unhinged posts” it seems that human agreement would indeed be very high. Again, more formal testing would be required, based on a more sensible query, and probably replicating a study that has already been conducted by human coders.

Based on a cursory reading of responses, Mistral seems to offer the more considerate and meaningful comments and ratings. One iteration of Mistral responses for each type of query are shown below for reference. Click e.g. on the “rating” column to sort by rating, click on “next” to see various responses, and click on the id on the left to see the original post on Telegram.

All responses by all models can be downloaded as a csv file.